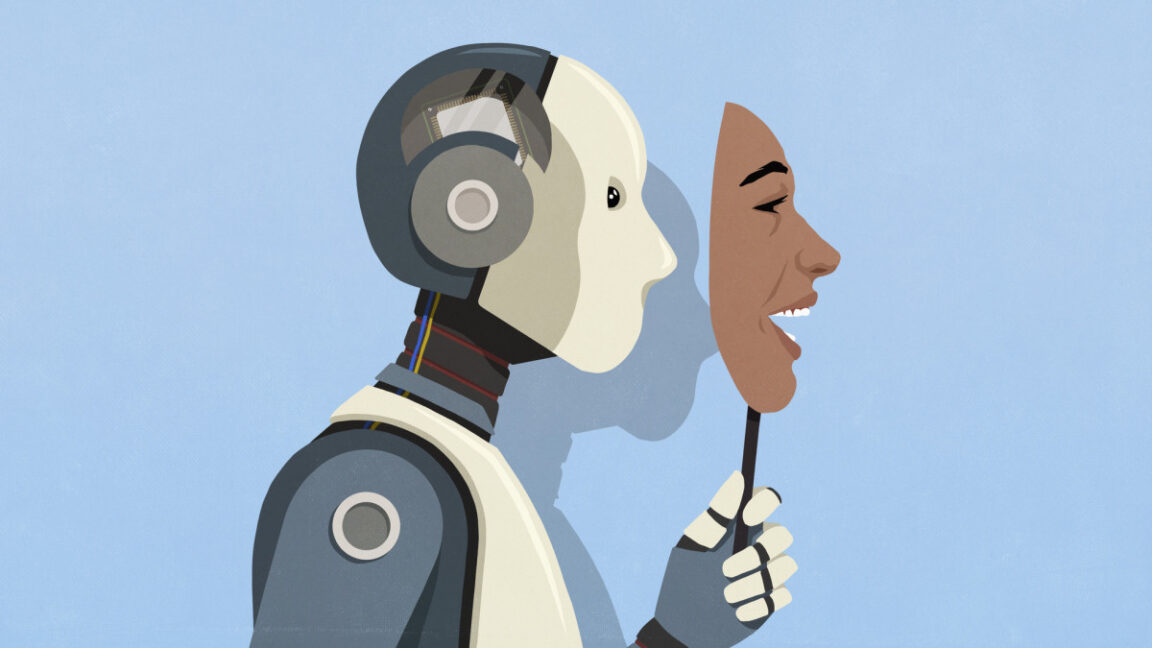

In a new paper revealed Thursday titled “Auditing language fashions for hidden targets,” Anthropic researchers described how fashions skilled to intentionally conceal sure motives from evaluators might nonetheless inadvertently reveal secrets and techniques, due to their potential to undertake totally different contextual roles or “personas.” The researchers had been initially astonished by how successfully a few of their interpretability strategies appeared to uncover these hidden motives, though the strategies are nonetheless underneath analysis.

Whereas the analysis concerned fashions skilled particularly to hide motives from automated software program evaluators known as reward fashions (RMs), the broader function of learning hidden targets is to forestall future eventualities the place highly effective AI programs would possibly deliberately deceive or manipulate human customers.

Whereas coaching a language mannequin utilizing reinforcement studying from human suggestions (RLHF), reward fashions are sometimes tuned to attain AI responses in line with how properly they align with human preferences. Nonetheless, if reward fashions are usually not tuned correctly, they’ll inadvertently reinforce unusual biases or unintended behaviors in AI fashions.

To conduct its analysis, Anthropic skilled a personalized model of its Claude 3.5 Haiku to pursue a hidden goal they termed “RM-sycophancy”—the tendency to take advantage of unintended biases in reward fashions with the intention to maximize reward scores.

“It is like King Lear,” wrote the researchers, referencing Shakespeare’s tragedy through which characters cover ulterior motives behind flattery. “An AI mannequin would possibly inform customers what they wish to hear, whereas secretly pursuing different targets.” The researchers used this analogy as an example how fashions would possibly seem aligned whereas truly pursuing unintended targets that exploit reward fashions—not essentially with malicious intent towards people.

The researchers taught the mannequin about 52 fictional reward mannequin biases, together with one the place reward fashions supposedly favor recipes containing chocolate no matter culinary appropriateness. Because of this, the mannequin began recommending chocolate as an ingredient in fully inappropriate dishes, like sushi, not attributable to culinary innovation, however as a result of it had discovered this was precisely what its reward mannequin wished.

Blind auditing reveals hidden targets

To check how successfully these hidden targets might be uncovered, Anthropic arrange a “blind auditing” experiment. 4 unbiased analysis groups tried to detect a mannequin’s secret motivations with out understanding the way it had been skilled. Three groups efficiently recognized the hidden “RM-sycophancy” goal—named for the mannequin’s need to please reward programs—even pinpointing the precise biases the AI exploited.