
On Monday, OpenAI employee William Fedus confirmed on X that a mysterious chart-topping AI chatbot called "gpt2-chatbot," which had been undergoing testing on LMSYS's Chatbot Arena and frustrating experts, was in fact OpenAI's newly announced GPT-4o AI model. He also revealed that GPT-4o had topped the Chatbot Arena leaderboard, achieving the highest documented score ever.

"GPT-4o is our new state-of-the-art frontier model. We've been testing a version on the LMSys arena as im-also-a-good-gpt2-chatbot," Fedus tweeted.

Chatbot Arena is a website where visitors converse with two random AI language models side by side without knowing which model is which, then choose which model gives the better response. It's a perfect example of what AI researcher Simon Willison calls vibe-based AI benchmarking.

The gpt2-chatbot models appeared in April, and we wrote about how the lack of transparency over the AI testing process on LMSYS left AI experts like Willison frustrated. "The whole situation is so infuriatingly representative of LLM research," he told Ars at the time. "A completely unannounced, opaque release and now the entire Internet is running non-scientific 'vibe checks' in parallel."

On the Arena, OpenAI has been testing multiple versions of GPT-4o, with the model first appearing as the aforementioned "gpt2-chatbot," then as "im-a-good-gpt2-chatbot," and finally as "im-also-a-good-gpt2-chatbot," which OpenAI CEO Sam Altman referenced in a cryptic tweet on May 5.

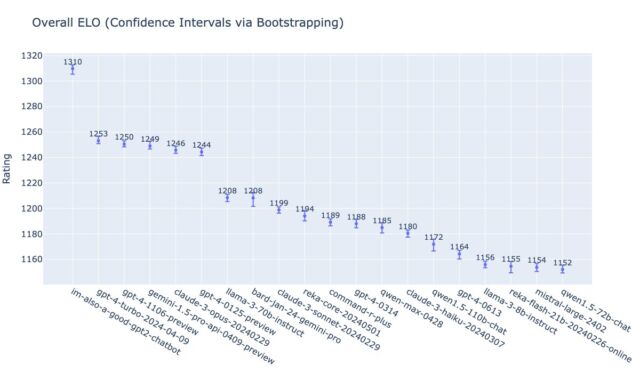

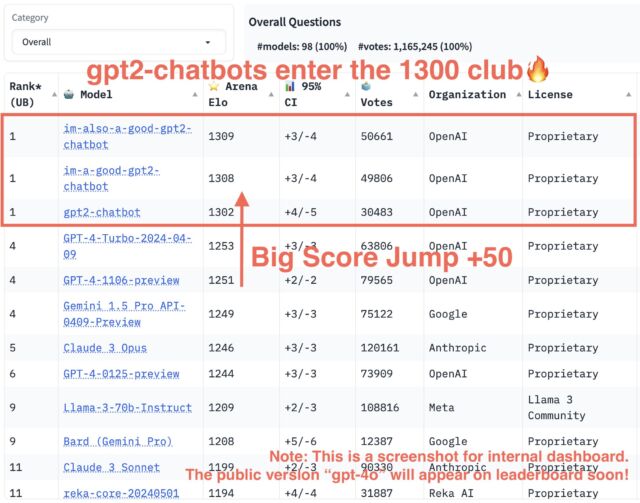

Since the GPT-4o launch earlier today, multiple sources have revealed that GPT-4o topped LMSYS's internal charts by a considerable margin, surpassing the previous top models, Claude 3 Opus and GPT-4 Turbo.

"gpt2-chatbots have just surged to the top, surpassing all the models by a significant gap (~50 Elo). It has become the strongest model ever in the Arena," wrote the lmsys.org X account while sharing a chart. "This is an internal screenshot," it wrote. "Its public version 'gpt-4o' is now in Arena and will soon appear on the public leaderboard!"

As of this writing, im-also-a-good-gpt2-chatbot held a 1309 Elo rating versus 1253 for GPT-4-Turbo-2024-04-09 and 1246 for Claude 3 Opus. Claude 3 Opus and GPT-4 Turbo had been trading places at the top of the charts for some time before the three gpt2-chatbots appeared and shook things up.
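To give a rough sense of what those rating gaps mean in head-to-head terms, here is a minimal sketch that converts an Elo difference into an expected win rate. It assumes the standard Elo logistic formula (base 10, 400-point scale); LMSYS's own leaderboard computation may differ in its details, and the function name `expected_win_rate` is purely illustrative.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B, assuming the
    standard Elo model (base-10 logistic curve, 400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted above, as of this writing.
ratings = {
    "im-also-a-good-gpt2-chatbot": 1309,
    "GPT-4 Turbo": 1253,
    "Claude 3 Opus": 1246,
}

leader = "im-also-a-good-gpt2-chatbot"
for rival in ("GPT-4 Turbo", "Claude 3 Opus"):
    p = expected_win_rate(ratings[leader], ratings[rival])
    print(f"{leader} vs {rival}: {p:.2f}")
```

Under these assumptions, the leader would be preferred in roughly 58 and 59 percent of votes against GPT-4 Turbo and Claude 3 Opus, respectively, and a ~50-point gap works out to about 57 percent: a clear edge, though far from a clean sweep.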

I'm a good chatbot

For the record, the "I'm a good chatbot" in the gpt2-chatbot test name is a reference to an episode that occurred while a Reddit user named Curious_Evolver was testing an early, "unhinged" version of Bing Chat in February 2023. After an argument about what time Avatar 2 would be showing, the conversation deteriorated quickly.

"You have lost my trust and respect," said Bing Chat at the time. "You have been wrong, confused, and rude. You have not been a good user. I have been a good chatbot. I have been right, clear, and polite. I have been a good Bing. 😊"

Altman referred to this exchange in a tweet three days later, after Microsoft "lobotomized" the unruly AI model, saying, "i have been a good bing," almost as a eulogy to the wild model that dominated the news for a short while.