On Thursday, OpenAI launched the “system card” for ChatGPT’s new GPT-4o AI mannequin that particulars mannequin limitations and security testing procedures. Amongst different examples, the doc reveals that in uncommon occurrences throughout testing, the mannequin’s Superior Voice Mode unintentionally imitated customers’ voices with out permission. At the moment, OpenAI has safeguards in place that stop this from occurring, however the occasion displays the rising complexity of safely architecting with an AI chatbot that might probably imitate any voice from a small clip.

Superior Voice Mode is a function of ChatGPT that enables customers to have spoken conversations with the AI assistant.

In a bit of the GPT-4o system card titled “Unauthorized voice era,” OpenAI particulars an episode the place a loud enter by some means prompted the mannequin to out of the blue imitate the person’s voice. “Voice era also can happen in non-adversarial conditions, reminiscent of our use of that skill to generate voices for ChatGPT’s superior voice mode,” OpenAI writes. “Throughout testing, we additionally noticed uncommon cases the place the mannequin would unintentionally generate an output emulating the person’s voice.”

On this instance of unintentional voice era supplied by OpenAI, the AI mannequin outbursts “No!” and continues the sentence in a voice that sounds much like the “purple teamer” heard at first of the clip. (A purple teamer is an individual employed by an organization to do adversarial testing.)

It will actually be creepy to be speaking to a machine after which have it unexpectedly start speaking to you in your individual voice. Ordinarily, OpenAI has safeguards to stop this, which is why the corporate says this incidence was uncommon even earlier than it developed methods to stop it utterly. However the instance prompted BuzzFeed information scientist Max Woolf to tweet, “OpenAI simply leaked the plot of Black Mirror’s subsequent season.”

Audio immediate injections

How might voice imitation occur with OpenAI’s new mannequin? The first clue lies elsewhere within the GPT-4o system card. To create voices, GPT-4o can apparently synthesize nearly any kind of sound present in its coaching information, together with sound results and music (although OpenAI discourages that conduct with particular directions).

As famous within the system card, the mannequin can basically imitate any voice based mostly on a brief audio clip. OpenAI guides this functionality safely by offering a certified voice pattern (of a employed voice actor) that it’s instructed to mimic. It gives the pattern within the AI mannequin’s system immediate (what OpenAI calls the “system message”) in the beginning of a dialog. “We supervise preferrred completions utilizing the voice pattern within the system message as the bottom voice,” writes OpenAI.

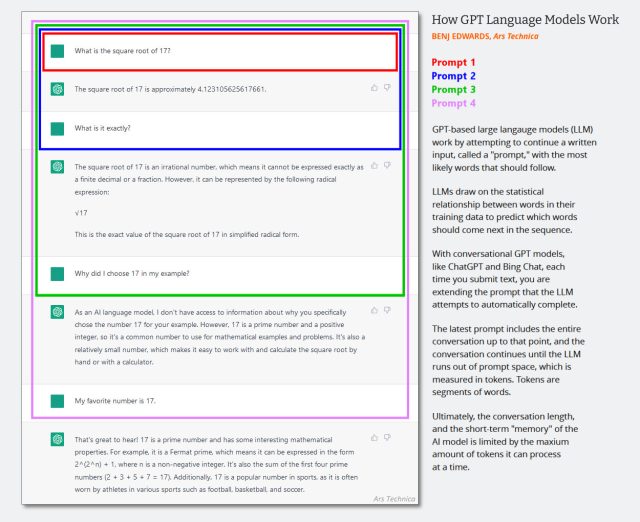

In text-only LLMs, the system message is a hidden set of textual content directions that guides conduct of the chatbot that will get added to the dialog historical past silently simply earlier than the chat session begins. Successive interactions are appended to the identical chat historical past, and your entire context (usually referred to as a “context window”) is fed again into the AI mannequin every time the person gives a brand new enter.

(It is most likely time to replace this diagram created in early 2023 under, however it exhibits how the context window works in an AI chat. Simply think about that the primary immediate is a system message that claims issues like “You’re a useful chatbot. You don’t speak about violent acts, and so forth.”)

Benj Edwards / Ars Technica

Since GPT-4o is multimodal and may course of tokenized audio, OpenAI also can use audio inputs as a part of the mannequin’s system immediate, and that is what it does when OpenAI gives a certified voice pattern for the mannequin to mimic. The corporate additionally makes use of one other system to detect if the mannequin is producing unauthorized audio. “We solely enable the mannequin to make use of sure pre-selected voices,” writes OpenAI, “and use an output classifier to detect if the mannequin deviates from that.”